TL;DR

- Yes, it is possible to run open source models on your own infrastructure to help speed up product development.

- No, it will not help you as much as Claude3.7 does and it will be ten times more expensive.

- Rent a H100 on-demand and run on it the strongest model you can fit on it. It will give you nice token generation speed and it will be useful to do the grunt work and vibe/pair programming.

Disclaimer - Why would you want to do this?

Valid reasons:

- You may be working on a regulated industry and your TISO does not allow sending any code to any AI provider.

- You may working on an isolated network where all traffic is capped.

- To run models with their full feature set.

To have funTo learn and experiment.

Wrong reasons:

- Save money

- Get better faster stronger generation

You need to be aware of the limitations of running your own setup. The infrastructure of the big players is far from what you can reasonably setup for you or your company. If the quality the open source models deliver or the token generation speed is not enough for you, you are expecting too much of what you can cost-effectively run on your own hardware.

Choosing hardware ($$)

LLMs will happily eat all CPU and VRAM you throw at them. You need to find a effective compromise between cost, quality (model size - VRAM) and token generation speed (GPU inference speed). For smooth coding aim for 30 tokens per second to keep frustration at bay.

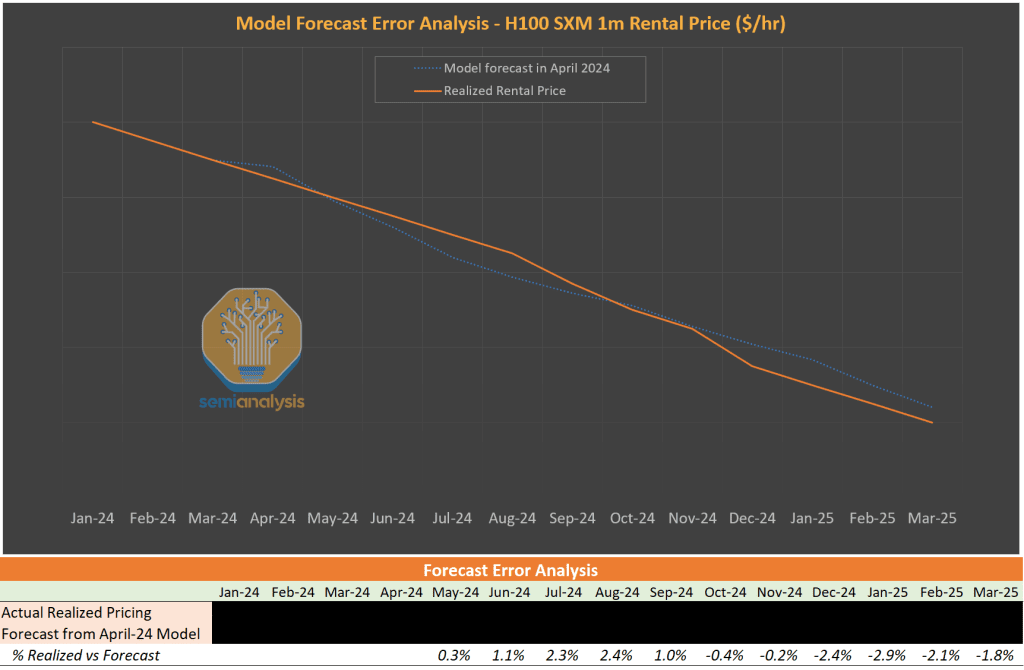

The cost of hardware is going down very quickly and it continue to do so for the foreseeable future [1]

When I compare GPU rental companies I focus on a specific product, the cost per hour of a single basic H100SXM. I find the H100 GPU the most reasonable GPU you can rent for personal use at the current price point. It is a very popular GPU on all providers. It will continue to be the GPU to use until the market gets flooded by the GB200 GPUs.

What am I expected to pay?

Around 2USD/h today. Falling under 1USD/h by EOY. Prices show significant disparity on different platforms. You will need to do some price shopping. Renting GPUs on the big providers aws, gcloud, azure is very expensive. Specialized GPU rental companies like runpod, nebius, lambda, hyperbolic have tighter margins but may lack the reliability, safety or certifications your business needs.

I’m a Hetzner fanboy. Why not Hetzner for this? Their highest offering comes with 1 RTX6000 ada that with its 48Gb VRAM will not handle 32B models.

Make sure that the hardware you choose

- Has barely enough VRAM to fit the model and your context and has compute enough to generate tokens at a reasonable speed

- Costs are reasonable for personal use or when shared on a small team

Lets do it

On this example I will rent a container with a H100GPU on runpod and we will run vLLM on it to get an openAI compatible API we can tell our tools to use. For this interactive use case I don’t recommend serverless so we will need to have a VM up we can directly.

Rent a machine running vanilla ubuntu, with one H100 and at least 120GB of disk space (The models use a lot of disk space). Once the machine is ready we first needs to install cuda.

On your machine prepare a couple of environment variables to ease the communication with the node.

export RUNPOD_ID=XXXXXXXXXX;

export RUNPOD_SSH_REDIRECT=XXXXXXXX;

ssh ${RUNPOD_ID}-${RUNPOD_SSH_REDIRECT}@ssh.runpod.io

SSH’ on the machine and run this to install basic tooling and CUDA:

apt-get update && apt-get install -y sudo python3-pip python3-venv git wget curl btop nvtop ncdu; wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2404/x86_64/cuda-keyring_1.1-1_all.deb; dpkg -i cuda-keyring_1.1-1_all.deb; apt-get update; apt-get install -y cuda-toolkit;

Installing CUDA will take a while. Once ready I setup a couple of environment variables for vLLM.

export HF_TOKEN=hf_xxxxxxxxxxxxxxx; # This is your HuggingFace API token. vLLM will use it to download the model. If the model you want to use require registration you will need first to ask for it on hugging face website. export GPU_AMOUNT=1; # Amount of GPU cards

Now we install vllm

python3 -m venv vllm_env && source vllm_env/bin/activate; pip install --upgrade pip; pip install -vvv vllm; # This will take a very long time

Now we are ready to run vLLM with our model. But what model?

Model Showdown

My gold standard is Anthrophic Claude Sonnet:

- It understands what I want without advanced prompt tricks

- It tightly follows the instructions on the prompts so the responses are palatable by tools

- They have awesome support for Model-Context-Protocol tools

- It is very useful

But of course we cannot run it on our own hardware.

I played with the following models: microsoft/phi-4, deepseek-ai/DeepSeek-R1-Distill-Qwen-32B, Qwen/Qwen2.5-Coder-32B-Instruct, deepseek-ai/DeepSeek-Coder-V2-Lite-Instruct, TheBloke/deepseek-coder-33B-instruct-AWQ, Qwen/Qwen2.5-Coder-32B-Instruct, Qwen/Qwen2.5-14B-Instruct-1M, unsloth/Qwen2.5-Coder-32B-Instruct-128K, Qwen/Qwen2.5-1.5B-Instruct, deepseek-ai/deepseek-coder-1.3b-base, deepseek/deepseek-r1-distill-qwen-32b, NovaSky-AI/Sky-T1-32B-Preview

I don’t recommend to do this. It is a waste of time. In the list there is a mix of small and mid size models. Some could fit in a single A40 card, others need to run on multi card setup. I tried different combinations of cards using RTX6000, L40, H100 cards etc. I constrained myself to Nvidia/CUDA products. The tiny models that can run in simple hardware are not of much help.

The Qwen2.5-Coder-32B is IMHO, over 6 months from its release on HF, still the best open source model you can use to help you with your code. It understands what you want, it communicates with Aider well and it generally produces useful code. It is also a good compromise as it can run the full model on a single H100 GPU at 35t/s

A couple days ago Deepseek-V3-03024 was released and it seems to be the current best option. Deepseek R2 will land in May and is going to be nuts. By the time you read this there will be newer, better models. Actually the trend now is to use TWO different models, one to plan the changes and another to actually code it.

If you want to see an overview of models checkout https://lifearchitect.ai/models-table/ and if you want to see what other people are using check the aider leaderboard / discord.

To run your chosen model you may need mystical guidance. There are many esoteric parameters you can introduce to make sure the model and the context size you chose fit on your GPU. It’s unclear to me how to consistently determine the best parametesr for you. It looks like a matter of trial and error.

This is what I use at the moment:

export VLLM_API_KEY=$(python -c 'import secrets; print(secrets.token_urlsafe())') echo $VLLM_API_KEY python3 -m vllm.entrypoints.openai.api_server --model Qwen/Qwen2.5-Coder-32B-Instruct --port 8888 --trust-remote-code --dtype bfloat16 --gpu-memory-utilization 0.98 --max-model-len 32768

Example of other models I run:

# python3 -m vllm.entrypoints.openai.api_server --model NovaSky-AI/Sky-T1-32B-Preview --port 8888 --trust-remote-code --dtype bfloat16 --gpu-memory-utilization 0.98 --max-model-len 32768 # It is good, maybe a little bit better than qwen2.5, but quickly starts with never ending repetitions. It is slower generating tokens.

# python3 -m vllm.entrypoints.openai.api_server --model deepseek/deepseek-r1-distill-qwen-32b --port 8888 --trust-remote-code --dtype bfloat16 --gpu-memory-utilization 0.98 --max-model-len 32768 # It works and reasons very well, and it can do commits on the codebase. Good for planning, but terrible at coding. It generates tokens around 35t/s

# python3 -m vllm.entrypoints.openai.api_server --model deepseek-ai/DeepSeek-Coder-V2-Lite-Instruct --port 8888 --tensor-parallel-size ${GPU_AMOUNT} --trust-remote-code # It does not output good quality. It also has trouble with aider

# # python3 -m vllm.entrypoints.openai.api_server --model TheBloke/deepseek-coder-33B-instruct-AWQ --port 8888 --tensor-parallel-size ${GPU_AMOUNT} # It works really well when it does, but it does not return consistently output that aider understands

It will take a long time to launch, download the model and make the API ready. Once the model is ready to receive requests, we need to prepare our tools.

The tools

LLMs know all the fine details and can give you good solutions for complicated problems if you describe the problem carefully, but often you will need to steer the model on the correct direction when it gets confused with basic stuff. To grease the relationship with the machine we need a UX that help communicate with the model and reduce frustration.

Tools are key. It is eye opening for developers when they first see the machine reasoning improvements and modifying their code.

Tools I recommend are Aider, VsCode+Cline, Windsurf or Cursor. All of them support MCP servers. MCP is the way to hook your AI with your machine or with other services. It will allow your AI scan your codebase, search on the web, use templates to create UI components, pick up data from your databases, manage your repository, create pull requests, make improvements after running run security checks (https://github.com/semgrep/mcp), or directly doing pentesting (https://github.com/athapong/argus).

For example with aider:

export OPENAI_API_BASE=https://${RUNPOD_ID}-8888.proxy.runpod.net/v1

aider --model openai/Qwen/Qwen2.5-Coder-32B-Instruct --model-metadata-file ~/.aider.model.metadata.json

What models are you running? Share your setup!

[1] Source: SemiAnalysis